Why I am called BlobEater

Saturday Reading 2016-12-17

It was my office Xmas party last night so whilst recovering on my settee, I’ll be reading:-

The Most Important Role of a SQL Server DBA

Back to basics, Angela Tidwell talks about what the most important task of a DBA is (can you guess?)

Don’t blink you might READPAST it

Cool post about the query hint READPAST

The ambiguity of the ORDER BY in SQL Server

Klaus Aschenbrenner talks about quirks with the ORDER BY clause

Evernote’s new privacy policies

So Evernote’s new privacy policies basically say that its employees can view your notes, yea…

Going to take a break over the holiday period so have a good Xmas and see you in the New Year.

Thanks for reading.

How to Use OR Condition in CASE WHEN Statement? – Interview Question of the Week #103

Question: How to Use OR condition in CASE WHEN Statement?

Answer: I personally do not like this kind of question, where the user is tested on their understanding of tricky wording rather than on SQL Server knowledge. However, we find all kinds of people in the real world, and it is practically impossible to educate everyone. In this blog post we will look at a question one of my friends was asked in an interview: how to use an OR condition in a CASE WHEN statement.

A simple CASE expression cannot contain OR, because it only compares one expression against a list of values. A searched CASE expression can. Here are the two forms of the CASE expression and the kinds of conditions each one allows.

Method 1: Simple CASE Expressions

A simple CASE expression checks one expression against multiple values. Within a SELECT statement, a simple CASE expression allows only an equality check; no other comparisons are made. A simple CASE expression operates by comparing the first expression to the expression in each WHEN clause for equivalence. If these expressions are equivalent, the expression in the THEN clause will be returned.

DECLARE @TestVal INT
SET @TestVal = 3
SELECT CASE @TestVal
    WHEN 1 THEN 'First'
    WHEN 2 THEN 'Second'
    WHEN 3 THEN 'Third'
    ELSE 'Other'
END

Method 2: Searched CASE expressions

A searched CASE expression allows comparison operators, and the use of AND and/or OR between Boolean expressions. The simple CASE expression checks only for equivalent values and cannot contain Boolean expressions. The basic syntax for a searched CASE expression is shown below:

DECLARE @TestVal INT
SET @TestVal = 5
SELECT CASE
    WHEN @TestVal <= 3 THEN 'Top 3'
    ELSE 'Other'
END
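The searched example above uses a single comparison, so here is a minimal sketch of the same form with OR inside the WHEN conditions. The variable value, the ranges and the labels are made up for illustration.

```sql
-- Illustrative values only: the thresholds and labels are assumptions.
DECLARE @TestVal INT = 7;

SELECT CASE
           -- OR is allowed because each WHEN holds a full Boolean expression
           WHEN @TestVal = 1 OR @TestVal = 2 OR @TestVal = 3 THEN 'Top 3'
           WHEN @TestVal BETWEEN 4 AND 10 OR @TestVal = 100 THEN 'Runner up or special'
           ELSE 'Other'
       END AS Ranking;
```

The WHEN clauses are evaluated in order and the first one that is true wins, so overlapping OR conditions should be listed from most specific to least specific.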

Here are two related blog posts on this subject:

SQL SERVER – CASE Statement/Expression Examples and Explanation

SQL SERVER – Implementing IF … THEN in SQL SERVER with CASE Statements

Reference: Pinal Dave (http://blog.SQLAuthority.com)

First appeared on How to Use OR Condition in CASE WHEN Statement? – Interview Question of the Week #103

SQL SERVER – CREATE OR ALTER Supported in Service Pack 1 of SQL Server 2016

There are many enhancements which were introduced in Service Pack 1 of SQL Server 2016. If you are a developer, you will easily appreciate this productivity improvement. Does the code below look familiar to you? Let us learn about CREATE OR ALTER, which is supported in Service Pack 1 of SQL Server 2016.

IF EXISTS (SELECT NAME FROM SYSOBJECTS WHERE NAME = 'sp_SQLAuthority' AND TYPE = 'P')
BEGIN
    DROP PROCEDURE sp_SQLAuthority
END
GO
CREATE PROCEDURE sp_SQLAuthority (@Params ...)
AS
BEGIN
    ...
END
GO

So, if you are deploying a script to SQL Server and you do not know the situation in production, that is, whether a stored procedure was previously deployed or not, the developer needs to add a check, drop the procedure if it is already there, and then create it.

Oracle has had CREATE OR REPLACE for a long time, and there has been feedback to the Microsoft SQL product team asking them to do something about it.

CREATE OR ALTER statement

Create or Replace xxx

Finally, they have heard it and introduced this change in Service Pack 1 of SQL Server 2016. Here are the objects which allow this syntax:

- Stored procedures

- Triggers

- User-defined functions

- Views

Unfortunately, it does not work for objects that require storage changes, such as tables and indexes. It is not allowed for CLR procedures either.

Here is the usage

CREATE OR ALTER PROCEDURE usp_SQLAuthority
AS
BEGIN
    SELECT 'ONE'
END
GO
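The same pattern applies to the other object types listed above. The following is a small sketch for a view and a scalar function, assuming SQL Server 2016 SP1 or later. The object names are hypothetical and exist only for this illustration.

```sql
-- Hypothetical object names, for illustration only.
-- CREATE OR ALTER must be the first statement in its batch, hence the GO separators.
CREATE OR ALTER VIEW dbo.vw_SQLAuthority_Demo
AS
SELECT 1 AS Id, 'ONE' AS Label;
GO

CREATE OR ALTER FUNCTION dbo.ufn_SQLAuthority_Double (@Value INT)
RETURNS INT
AS
BEGIN
    RETURN @Value * 2;
END
GO

-- The script can be re-run as-is: the first run creates, later runs alter.
SELECT dbo.ufn_SQLAuthority_Double(21) AS Doubled;
```

Because the object is altered rather than dropped and recreated, permissions already granted on it are kept, which is one reason to prefer this over the drop-and-create pattern.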

Do you think this is a good enhancement? I would highly encourage you to read the blog post below on a similar feature: SQL Server – 2016 – T-SQL Enhancement “Drop if Exists” clause

Were you aware of this? Do you want me to blog about more SP1 enhancements?

Reference: Pinal Dave (http://blog.sqlauthority.com)

First appeared on SQL SERVER – CREATE OR ALTER Supported in Service Pack 1 of SQL Server 2016

SQL SERVER – CLONEDATABASE: Generate Statistics and Schema Only Copy of the Database

I have been writing about how some of the interesting enhancements made with SQL Server 2016 SP1 have caught my attention. This one is a killer feature that can be of great value when you are trying to do testing. Having said that, I also want to call out that this feature has been added with SQL Server 2014 SP2 too. When you get into performance problems related to the query optimizer, then this capability will be of great advantage. In this blog post we will learn about Generate Statistics and Schema Only Copy of the Database.

To get into the specifics, here is what the command looks like:

The text is shown below for reference:

Database cloning for ‘AS_Sample’ has started with target as ‘My_Sample_mybkp’.

Database cloning for ‘AS_Sample’ has finished. Cloned database is ‘My_Sample_mybkp’.

Database ‘My_Sample_mybkp’ is a cloned database.

A cloned database should be used for diagnostic purposes only and is not supported for use in a production environment.

DBCC execution completed. If DBCC printed error messages, contact your system administrator.
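Judging from the database names in the messages above, the command that produced them would presumably be along these lines. This is a sketch reconstructed from the output, not a copy of the original screenshot.

```sql
-- Source and target names are taken from the output messages above.
DBCC CLONEDATABASE (AS_Sample, My_Sample_mybkp);
GO

-- Optional check: the clone should be reported as read-only.
SELECT name, is_read_only
FROM sys.databases
WHERE name = N'My_Sample_mybkp';
GO
```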

Once the command is run, it creates a new database using the same file layout but with the size of the model database. Fundamentally, a read-only database is created using an internal snapshot mechanism. In addition, the schema of all objects is copied along with the statistics of all indexes from the source database.

The object explorer after the database is created would look like:

To illustrate that the size of the cloned database differs from the original, see the image below. Here the original database data files are close to 600MB while the cloned database is just 16MB.

If you select the table’s data, you will see there is no data in these tables on the Cloned database. This is fundamentally because we have copied just the schema and statistics – not the data.

To check if a selected database is a clone or not, use the following database property:

SELECT DATABASEPROPERTYEX('My_Sample_mybkp', 'IsClone')
GO

If the command returns 1, the database is a clone. Any other value, including NULL, means this is not a cloned database.

As I wrap up this blog, I would like to know whether you will be using this feature in your environment. What is your use case for it? Do let me know via the comments below.

Reference: Pinal Dave (http://blog.sqlauthority.com)

First appeared on SQL SERVER – CLONEDATABASE: Generate Statistics and Schema Only Copy of the Database

SQL Server Identity Values Check on GitHub

Last year I blogged about a script that Karen López (blog | @datachick) and I wrote together to help you determine if you were running out of identity values.

The post SQL Server Identity Values Check on GitHub appeared first on Thomas LaRock.

If you liked this post then consider subscribing to the IS [NOT] NULL newsletter: http://thomaslarock.com/is-not-null-newsletter/

Azure SQL Data Warehouse with Improved Loading and Monitoring in Azure Portal and SSMS

Read more https://winbuzzer.com/2016/12/20/microsoft-updates-azure-sql-data-warehouse-improved-loading-monitoring-azure-portal-ssms-xcxwbn/.

SQL Server – PowerShell Script – Getting Properties and Details

I write and play around a lot with SQL Server Management Studio and I love working with it. Having said that, I also explore the ways people want to run code to achieve certain objectives. In the DBA’s world, when the number of servers is no longer in single digits, people look for ways to automate and script things out. Recently I was with the backend team of a retail company in India, and they said they have more than 1500 databases running at their various outlets and point-of-sale counters, while the DBA team managing them has fewer than 10 people. I was pleasantly surprised by this level of detail. In this blog post we will learn about a PowerShell script.

Either the people managing the environment are too good or the product SQL Server is very good. I tend to think it must be both. Coming back to this blog post, it was inspired by one of them. They told me they run patch management and similar tasks, and use PowerShell scripts to find out whether the databases are up to date.

I am not going to say much about the work they did. I went about exploring some options with PowerShell, and in that pursuit I figured out that PowerShell can give you more information than you would guess. Below is a simple script that gives you information from SQL Server properties that are of value. Some of the useful information you can get covers the default backup location, collation, error log path, edition of the server, databases involved, case sensitivity, logins, memory, logical processors and so on. The output is exhaustive.

Import-Module SQLPS -DisableNameChecking

# Run the commands below in sequence in PowerShell under Admin mode to get detailed information about the instance. Replace localhost with the server name.
$server = New-Object -TypeName Microsoft.SqlServer.Management.Smo.Server -ArgumentList localhost
$server | Get-Member | Where {$_.MemberType -eq "Property" -and $_.Name -ne "SystemMessages"} | select Name, @{Name="Value";Expression={[string]$server.($_.Name)}}

A typical output for the same would look like:

As I wrap up this blog, please let me know if you have some interesting script use cases you want to share. I would be more than happy to blog about those scenarios too. Let me know via the comments below. Please do share your favorite PowerShell script with me via email and I will be happy to publish it with due credit.

Reference: Pinal Dave (http://blog.sqlauthority.com)

First appeared on SQL Server – PowerShell Script – Getting Properties and Details

That Time We Fixed Prod Without Admin Credentials

Merry Christmas readers! It’s story time. This is about a problem I encountered a few weeks ago. We were looking at a production site using sp_whoisactive and we noticed a lot of blocking on one particular procedure. I’m going to explain how we tackled it.

In this case, I think it’s interesting that we were able to mitigate the problem without requiring sysadmin access.

The Symptoms

Using sp_whoisactive and other tools, we noticed several symptoms.

- SQLException timeout errors were reported when calling one procedure in particular.

- Many sessions were executing that procedure concurrently. Or at least they were attempting to.

- There was excessive blocking and the lead blocker was running the same procedure.

- The lead blocker had been running the longest (about 29 seconds)

- The blocking was caused by processes waiting on Sch-M locks for a table used by that query
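The symptoms above were gathered with sp_whoisactive. As a rough alternative, the blocking chain can also be seen straight from the DMVs. This is a swapped-in technique and not what was run on the day, and like sp_whoisactive it assumes the login has VIEW SERVER STATE permission.

```sql
-- Sketch only: lists blocked requests, who blocks them, and what they wait on.
-- A wait_type of LCK_M_SCH_M would correspond to the Sch-M waits described above.
SELECT r.session_id,
       r.blocking_session_id,
       r.wait_type,
       r.wait_time AS wait_time_ms,
       r.wait_resource,
       OBJECT_NAME(t.objectid, t.dbid) AS running_object
FROM sys.dm_exec_requests AS r
CROSS APPLY sys.dm_exec_sql_text(r.sql_handle) AS t
WHERE r.blocking_session_id <> 0
ORDER BY r.wait_time DESC;
```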

Here’s what was going on:

SQL Server was struggling to compile the procedure in time and the application wouldn’t let it catch its breath. The query optimizer was attempting to create statistics automatically that it needed for optimizing the query, but after thirty seconds, the application got impatient and cancelled the query.

So the compilation of the procedure was cancelled and this caused two things to happen. First, the creation of the statistics was cancelled. Second, the next session in line was allowed to run. But the problem was that the next session had already spent 28 seconds blocked by the first session and only had two seconds to try to compile a query before getting cancelled itself.

The frequent calls to the procedure meant that nobody had time to compile this query. And we were stuck in an endless cycle of sessions that wanted to compile a procedure, but could never get enough time to do it.

Why was SQL Server taking so long to compile anyway?

After a bunch of digging, we found out that a SQL Server bug was biting us. This bug involved

- SQL Server 2014

- Trace flags 2389 and 2390

- Filtered Indexes on very large base tables

Kind of a perfect storm of factors that exposed a SQL Server quirk that caused long compilation times, timeouts and pain.

What We Did About It

Well, in this case, I think that trace flags 2389 and 2390 have kind of outlived their usefulness. So the main fix for this problem is to get rid of those trace flags. But it would be some time before we could get that rolled out.

So for the short term, we worked at getting that procedure compiled and into SQL Server’s cache.

We called the procedure ourselves in Management Studio. Our call waited about thirty seconds before it got its turn to run. Then it spent a little while to compile and run the procedure. Presto! The plan is in the cache now! And everything’s all better right? Nope. Not quite. The timeouts continued.

If you’ve read Erland Sommarskog’s Slow in the Application, Fast in SSMS you may have guessed what’s going on. When we executed the procedure in SSMS, it was using different settings. So the query plan we compiled couldn’t be reused by the application. Remember, all settings (including ARITHABORT) need to match before cached plans can be reused by different sessions. We turned ARITHABORT off in SSMS and called the procedure again.
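Here is a minimal sketch of that last step. The procedure name and parameter are hypothetical stand-ins for the real procedure, and the assumption is that the application connects with ARITHABORT off, which is the default for most client libraries.

```sql
-- Match the application's session settings before warming the plan cache.
SET ARITHABORT OFF;
GO

-- dbo.s_ProblemProcedure and @SomeId are hypothetical stand-ins.
EXEC dbo.s_ProblemProcedure @SomeId = 1;
GO

-- Optional check: one row per cached plan for the procedure.
-- Plans compiled under different SET options show different set_options values.
SELECT cp.usecounts,
       pa.value AS set_options
FROM sys.dm_exec_cached_plans AS cp
CROSS APPLY sys.dm_exec_plan_attributes(cp.plan_handle) AS pa
CROSS APPLY sys.dm_exec_sql_text(cp.plan_handle) AS st
WHERE pa.attribute = 'set_options'
  AND st.dbid = DB_ID()
  AND st.objectid = OBJECT_ID(N'dbo.s_ProblemProcedure');
```

If the check returns two rows with different set_options values, the SSMS session and the application are still compiling separate plans.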

After a minute, the query completed and all blocking immediately stopped. Whew! The patient was stable.

The whole experience was a pain. And an outage is an outage. Though the count of the snags for the year had increased …

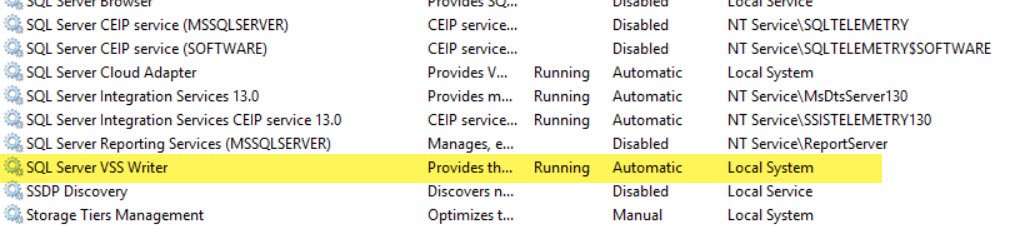

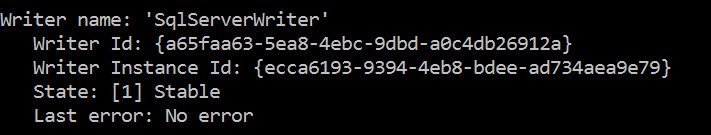

SQL SERVER – SqlServerWriter Missing from an Output of VSSadmin List Writers Command

One of the DBAs from my client contacted me for a quick suggestion while I was working with them on a performance tuning exercise. I always ask for some time to understand the exact problem and behavior. As per the DBA, they use a third-party backup solution to take backups, and SQL backups were not happening. When they contacted the third-party vendor, they were asked to run the command VSSadmin List Writers and check whether SqlServerWriter was listed. Unfortunately, the component was missing from the output. He asked me about the possible reasons. This was not a performance tuning question, but I promised that I would share whatever information I could find and write a blog post about it.

When I run it on one of my machines, I get the output below.

There are more writers available which I am not showing in the image. The important point here is that the client was not seeing the entry below, which I was able to see on my lab machine.

CHECKLIST

Based on my search on the internet, I found the possible causes below.

- Make sure that the SQL Writer Service is installed.

If it’s not started, then start it. If it’s not available, then we need to install it from SQL installation media.

- Check Event logs and look for errors.

- Make sure that no database in the instance has a trailing space in its name.

- Make sure the service account for SQLWriter is able to connect to ALL instances on the machine.
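The trailing space check from the list above is easy to miss by eye. A small sketch of one way to find such databases, to be run on each instance on the machine:

```sql
-- DATALENGTH counts trailing spaces, RTRIM removes them,
-- so any difference means the name ends with at least one space.
SELECT name,
       DATALENGTH(name) / 2 AS name_length_chars
FROM sys.databases
WHERE DATALENGTH(name) <> DATALENGTH(RTRIM(name));
```

An empty result means no database name on that instance ends with a space.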

WORKAROUND / SOLUTION

I was informed by my client that they had a permission-related issue. After changing the service account of SQL Server, they could see the correct output and backups also started working.

Have you seen a similar issue and found any solution other than what I listed earlier?

Reference: Pinal Dave (http://blog.sqlauthority.com)

First appeared on SQL SERVER – SqlServerWriter Missing from an Output of VSSadmin List Writers Command

Point in Time Recovery with SQL Server

http://www.sqlshack.com/point-in-time-recovery-with-sql-server

What is Memory Grants Pending in SQL Server? – Interview Question of the Week #103

Question: What is Memory Grants Pending in SQL Server?

Answer: This is a very interesting question, and the subject is so broad that it is difficult to cover in a single blog post. I will try to answer it in just 200 words because, as usual in an interview, we have only a few moments to give the correct answer.

Memory Grants Pending displays the total number of SQL Server processes that are waiting to be granted workspace in the memory.

In a perfect world the value of Memory Grants Pending will be zero (0). That means there are no processes on your server waiting for memory to be assigned to them before they can get started. In other words, there is enough memory available in your SQL Server that all the processes are running smoothly and memory is not an issue for you.

Here is a quick script which you can run to identify the value of your Memory Grants Pending.

SELECT object_name, counter_name, cntr_value
FROM sys.dm_os_performance_counters
WHERE [object_name] LIKE '%Memory Manager%'
AND [counter_name] = 'Memory Grants Pending'

Here is the result of the above script:

If the value of this counter is consistently higher than 0, you may be suffering from memory pressure. This is one of the counters which indicates that your server can use more memory.
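When the counter is above zero, a natural follow-up is to see which queries are actually waiting. The sketch below uses sys.dm_exec_query_memory_grants, where a NULL grant_time marks a request that is still waiting for its grant. It is an illustration, not part of the original interview answer.

```sql
-- Requests that have asked for workspace memory but have not received it yet.
SELECT mg.session_id,
       mg.request_time,
       mg.requested_memory_kb,
       mg.wait_time_ms,
       t.text AS query_text
FROM sys.dm_exec_query_memory_grants AS mg
CROSS APPLY sys.dm_exec_sql_text(mg.sql_handle) AS t
WHERE mg.grant_time IS NULL
ORDER BY mg.wait_time_ms DESC;
```

The number of rows returned should roughly track the Memory Grants Pending counter at the moment the query runs.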

Reference: Pinal Dave (http://blog.sqlauthority.com)

First appeared on What is Memory Grants Pending in SQL Server? – Interview Question of the Week #103

SQL SERVER – FIX – Error – Msg 4928, Level 16, State 1. Cannot Alter Column Because it is ‘Enabled for Replication or Change Data Capture’

One of my Facebook followers sent me an interesting situation, where he faced an error related to replication or change data capture.

Hi Pinal,

We have a database in our QA environment which is restored from Production (we have replication set up in Production). In the QA environment, we don’t have any replication or Change Data Capture (CDC). When we tried to rename one of the fields in a table in the Lab, we got the following error:

--Rename column
EXEC sp_rename 'dbo.Foo.Bar', 'Bar1', 'COLUMN';
GO

Msg 4928, Level 16, State 1, Procedure sp_rename, Line 611

Cannot alter column ‘Bar’ because it is ‘enabled for Replication or Change Data Capture’.

I have verified the following:

- None of the tables have is_tracked_by_cdc = 1.

- None of the tables or columns have is_replicated = 1

- Replication was already disabled by using sp_removedbreplication.

Any ideas?

Thanks in advance.

John S.
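For reference, the checks John describes can be expressed roughly as follows. This is a sketch of the catalog queries involved, not the exact statements he ran, and YOUR_DATABASE_NAME is a placeholder.

```sql
USE YOUR_DATABASE_NAME;  -- placeholder database name
GO

-- Database-level flags for replication and CDC.
SELECT name, is_published, is_merge_published, is_cdc_enabled
FROM sys.databases
WHERE name = DB_NAME();

-- Tables still marked as tracked by CDC or as replicated.
SELECT SCHEMA_NAME(schema_id) AS schema_name, name AS table_name,
       is_tracked_by_cdc, is_replicated
FROM sys.tables
WHERE is_tracked_by_cdc = 1 OR is_replicated = 1;

-- Columns still marked as replicated.
SELECT OBJECT_NAME(object_id) AS table_name, name AS column_name
FROM sys.columns
WHERE is_replicated = 1;
GO
```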

This was not a pretty situation because the error message was not in line with the data points they had already verified. I strongly suspected that some metadata was incorrect somewhere.

Based on my search on the internet, I found that the command below has helped a few others.

execute sp_replicationdboption 'DBName','Publish','False',1

But it didn’t work. So, I thought of playing with CDC by enabling and disabling again because this was a restored copy of the production database.

WORKAROUND/SOLUTION

I provided the script below to enable and disable CDC one more time on the table.

USE YOUR_DATABASE_NAME
GO
EXEC sys.sp_cdc_enable_db
GO
EXEC sys.sp_cdc_enable_table
    @source_schema = N'dbo'
    ,@source_name = N'foo'
    ,@role_name = NULL
    ,@filegroup_name = N'primary'
    ,@supports_net_changes = 1
GO
EXEC sys.sp_cdc_disable_table
    @source_schema = N'dbo'
    ,@source_name = N'foo'
    ,@capture_instance = N'ALL'
GO
EXEC sys.sp_cdc_disable_db
GO

As soon as the above was done, we could rename the column.

Have you encountered any such weird situations where the error message was not correct due to a metadata problem?

Reference: Pinal Dave (http://blog.sqlauthority.com)

First appeared on SQL SERVER – FIX – Error – Msg 4928, Level 16, State 1. Cannot Alter Column Because it is ‘Enabled for Replication or Change Data Capture’

How to Find Number of Times Function Called in SQL Server? – Interview Question of the Week #104

Question: How to Find Number of Times Function Called in SQL Server?

Answer: This is not a real interview question, but it was asked of me during a recent performance tuning consultancy. We were troubleshooting a function which was called quite a lot and was creating a huge CPU spike. Suddenly, the senior DBA asked me if there was any way to know more details about such functions, like how many times a function was called as well as its CPU and IO consumption. I personally think it is a very valid question.

In SQL Server 2016, there is a new DMV, sys.dm_exec_function_stats, which gives details related to function execution since the last service restart. This query will only work with SQL Server 2016 and later versions. Let us see a quick query which, when executed, gives us details like total worker time, logical reads and elapsed time for each function. I have ordered this query by total worker time, but you can easily change the ORDER BY to your preferred order.

SELECT TOP 25
    DB_NAME(fs.database_id) DatabaseName,
    OBJECT_NAME(fs.object_id, fs.database_id) FunctionName,
    fs.cached_time,
    fs.last_execution_time,
    fs.total_elapsed_time,
    fs.total_worker_time,
    fs.total_logical_reads,
    fs.total_physical_reads,
    fs.total_elapsed_time/fs.execution_count AS [avg_elapsed_time],
    fs.last_elapsed_time,
    fs.execution_count
FROM sys.dm_exec_function_stats AS fs
ORDER BY [total_worker_time] DESC;

Note: This script only works in SQL Server 2016 and later versions. Do not attempt to run it on earlier versions of SQL Server, or you will get an error.

Reference: Pinal Dave (http://blog.sqlauthority.com)

First appeared on How to Find Number of Times Function Called in SQL Server? – Interview Question of the Week #104

SQL SERVER 2016 – Trace Flag 1117 is Discontinued. Use the Options Provided with ALTER DATABASE

While doing performance tuning for one of my clients, I was looking at ERRORLOG files to understand their system. I saw that trace flags 1117 and 1118 were enabled via startup parameters.

2016-12-05 06:02:14.440 Server Microsoft SQL Server 2016 (SP1) (KB3182545) – 13.0.4001.0 (X64)

2016-12-05 06:02:14.440 Server Registry startup parameters:

-T 1117

-T 1118

-d C:\Program Files\Microsoft SQL Server\MSSQL13.MSSQLSERVER\MSSQL\DATA\master.mdf

-e C:\Program Files\Microsoft SQL Server\MSSQL13.MSSQLSERVER\MSSQL\Log\ERRORLOG

If you have not heard of them, all I would say is that these trace flags are for TempDB optimization. You can read more about them in the articles below.

- SQL Server 2016 – Enhancements with TempDB

- SQL Server 2016 – Introducing AutoGrow and Mixed_Page_Allocations Options – TraceFlags

While looking further, I also saw the messages below in ERRORLOG.

2016-12-05 06:02:14.440 Server Trace flag 1117 is discontinued. Use the options provided with ALTER DATABASE.

2016-12-05 06:02:14.440 Server Trace flag 1118 is discontinued. Use the options provided with ALTER DATABASE.

This was a good piece of advice from the SQL Server engine: in SQL Server 2016 these trace flags no longer have any effect, and the behavior needs to be controlled via ALTER DATABASE options instead.

Did you know about this message in ERRORLOG? I think you should check your SQL Server 2016 instances to see whether you are using these discontinued trace flags. If you are, you can remove them in SQL Server 2016.
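As a rough sketch of what "the options provided with ALTER DATABASE" look like in SQL Server 2016, the statements below set the per-database equivalents of the two trace flags. YourDatabase is a placeholder name, and whether you need to change anything at all depends on the current settings, which the last two queries show.

```sql
-- YourDatabase is a placeholder; substitute a real user database.

-- Equivalent of trace flag 1117: grow all files in the filegroup together.
ALTER DATABASE YourDatabase MODIFY FILEGROUP [PRIMARY] AUTOGROW_ALL_FILES;
GO

-- Equivalent of trace flag 1118: allocate uniform extents instead of mixed pages.
ALTER DATABASE YourDatabase SET MIXED_PAGE_ALLOCATION OFF;
GO

-- Check the current settings.
SELECT name, is_mixed_page_allocation_on
FROM sys.databases;

USE YourDatabase;
GO
SELECT name, is_autogrow_all_files
FROM sys.filegroups;
GO
```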

Reference: Pinal Dave (http://blog.sqlauthority.com)

First appeared on SQL SERVER 2016 – Trace Flag 1117 is Discontinued. Use the Options Provided with ALTER DATABASE

16 Things That Didn’t Happen to Me in 2016

It’s that time of year again. The time when we find many “year-2016-in-review”, “what-to-expect-when-you’re-expecting-2017”, and “resolutions-you-will-not-follow-in-2017” articles littered about the series of tubes known as the internet.

The post 16 Things That Didn’t Happen to Me in 2016 appeared first on Thomas LaRock.

If you liked this post then consider subscribing to the IS [NOT] NULL newsletter: http://thomaslarock.com/is-not-null-newsletter/

SQL SERVER – Why Does Encrypted Column Show NULL on Subscriber?

While I was online on Skype last night, one of my old customers pinged me and wanted my opinion on a peculiar issue he was facing: an encrypted column was showing NULL on the subscriber.

He said that they have a replication setup between SQL Server 2016 instances. One of the servers is the subscriber. They have a table that is part of the publication where a column level encryption is used with symmetric key. As per him the replication is working fine, but the encrypted column is showing as NULL when they run queries on subscriber database.

I searched on the internet and shared this article with them: How to: Replicate Data in Encrypted Columns (SQL Server Management Studio)

As per my understanding, in order for column-level encryption to work in a replication topology, we need to execute CREATE SYMMETRIC KEY at the subscriber using the same values for ALGORITHM, KEY_SOURCE, and IDENTITY_VALUE that were used at the publisher.

A symmetric key is uniquely identified using the Key_guid property of the key. When the same Key_source and Identity_value are used, we will get the same Key_guid regardless of the server where the symmetric key is created.
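A minimal sketch of what that looks like is shown below. The key name, key source, identity value and password are all placeholders invented for this illustration. The point is only that the same statement, with identical ALGORITHM, KEY_SOURCE and IDENTITY_VALUE, is run on both the publisher and the subscriber.

```sql
-- Run the identical statement on the publisher and on the subscriber.
-- All names and literal values here are placeholders.
CREATE SYMMETRIC KEY ReplDemoKey
WITH ALGORITHM = AES_256,
     KEY_SOURCE = 'a long pass phrase shared by both servers',
     IDENTITY_VALUE = 'a second phrase that determines the key_guid'
ENCRYPTION BY PASSWORD = 'Pl@ceholder-Passw0rd!';
GO

-- The key_guid reported here should then be the same on both servers.
SELECT name, key_algorithm, key_guid
FROM sys.symmetric_keys
WHERE name = N'ReplDemoKey';
GO
```

The protection mechanism (password here, but a certificate is also common) can differ between servers. It is the key source and identity value that must match.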

So, we checked the Key_guid property of the symmetric keys by querying sys.symmetric_keys on both servers and found that the Key_guid property was NOT the same.

SELECT name, key_algorithm, key_guid FROM sys.symmetric_keys

On the publisher, the value was E9CCD100-1A77-55E7-4D47-9F7B93A45611

On the subscriber, the value was 14C25100-DC28-6D3F-67E2-809F9FB441AF

The above means that the symmetric key that was used to encrypt the data on the publisher is different from the one we are using to decrypt on the destination. This explained the behavior they were seeing.

Later, when we checked the script, the value used for Identity_value while creating the symmetric key was different, and that is why the Key_guid did not match.

SOLUTION

Based on the above analysis, it was clear that the symmetric key was not created correctly on the subscriber. Once we followed the article correctly, we were able to see the data on the subscriber as well.

How to: Replicate Data in Encrypted Columns (SQL Server Management Studio)

Reference: Pinal Dave (http://blog.sqlauthority.com)

First appeared on SQL SERVER – Why Does Encrypted Column Show NULL on Subscriber?

Looking Back At 2016

When this blog started back in 2011 I had no idea that it would continue to grow to where it has gotten to today. There have been many learning curves along the way – some fun and some not so fun, but that is part of the journey.

Each year since inception this blog has steadily gained steam in terms of increased traffic, so I am thankful for that. The SQL Professor was not started for that sole purpose though. Rather it was a place to keep my thoughts regarding issues, ideas I have or have run across over a period of time, and to write about happenings in my own professional career. Getting feedback from you, the reader, is an added bonus. Receiving both good and bad comments and discussions is an integral piece in helping this blog to continue to morph and grow – a living document if you will.

I’m reminded often that while producing good content is key, it is also key to write about what you love and enjoy doing. SQL has been a big part of my professional career and I look forward to creating additional blog posts in that area. Another area that I’ve come to have a strong passion for is leadership. In 2017 I plan on perhaps producing a series on leadership and how it pertains to one’s career.

Looking Back

Nope; not doing that. While 2016 was a great ride professionally I want to set sights on 2017 and continue to hopefully make an impact.

Looking Ahead

In looking ahead there are several things on tap for 2017. Some of them are:

- Continue to grow the User Group base here locally.

- Continue to help and grow the SQL Saturday event here locally.

- Expand speaking opportunities where it makes sense.

- Continue to help as much as I can with the HA/DR virtual chapter.

- Dive further into PASS and continue to help the organization that I’ve come to be strongly passionate about.

- Continue to lead and build a strong DBA team here in the current shop (very talented individuals. Extremely blessed to have an outstanding team).

- Build on leadership qualities.

- Continue to do the best work I can for the SQL community.

- Continue to bui

Expectations

Something that I learned long ago is that we can’t make everyone happy regardless of how hard we try. Mistakes will happen, and events will occur – that is part of the journey. I said the other day that 2016 slapped me in the face on various fronts, but I’m still standing. While it is important to reflect on what lessons were learned in 2016, I also want to encourage you to look forward to 2017. Change, if you want it, starts from within. Will you take the first steps? Break out of your comfort zone and explore new heights.

Here is to a solid end of 2016, and to new beginnings/adventures in 2017 ~ Cheers.

SQL SERVER – Cannot initialize the data source object of OLE DB provider “Microsoft.ACE.OLEDB.12.0” for linked server

As you might have seen, along with performance tuning related work, I also help the community by answering questions and troubleshooting issues with them. A few days ago, a community leader and user group champion contacted me for assistance. He informed me that they were using the Microsoft Access Database Engine 2010 provider to read data from an Excel file, but they were seeing the error below while doing a test connection. Let us learn about this error related to the OLE DB provider.

Cannot initialize the data source object of OLE DB provider “Microsoft.ACE.OLEDB.12.0” for linked server “EXCEL-DAILY”.

OLE DB provider “Microsoft.ACE.OLEDB.12.0” for linked server “EXCEL-DAILY” returned message “Unspecified error”. (Microsoft SQL Server, Error: 7303)

This error appears only from the client machine, but test connection works fine when done on the server. The same error message is visible via SSMS.

EXEC master.dbo.sp_addlinkedserver
    @server = N'EXCEL-DAILY'
    ,@srvproduct = N'Excel'
    ,@provider = N'Microsoft.ACE.OLEDB.12.0'
    ,@datasrc = N'D:\EMPLOYEE_ATTEN_DATA.xlsx'
    ,@provstr = N'Excel 12.0; HDR=Yes'

After a lot of checking, we found that the accounts used from the client and on the server were different. So, I captured Process Monitor traces for both the working and the non-working test connection. It didn’t take much time to spot the line below in the non-working case.

sqlservr.exe QueryOpen C:\Users\svc_app\AppData\Local\Temp ACCESS DENIED

WORKAROUND/SOLUTION

So, we went to the SQL Server machine and gave full permission on the path which Process Monitor listed as access denied: C:\Users\svc_app\AppData\Local\Temp.

After the permission was given, the test connection worked and the client machine could read data from the Excel file using the linked server.
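To verify a fix like this from a query window rather than the SSMS dialog, something along these lines can be run from the client under the same login. This is a sketch: the worksheet name Sheet1 is an assumption, since the original post does not say which sheet was read.

```sql
-- Same check as the "Test Connection" menu item in SSMS.
EXEC master.dbo.sp_testlinkedserver @servername = N'EXCEL-DAILY';
GO

-- Read a few rows through the linked server.
-- [Sheet1$] is a hypothetical worksheet name; replace it with the real one.
SELECT TOP (10) *
FROM OPENQUERY([EXCEL-DAILY], 'SELECT * FROM [Sheet1$]');
GO
```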

Reference: Pinal Dave (http://blog.sqlauthority.com)

First appeared on SQL SERVER – Cannot initialize the data source object of OLE DB provider “Microsoft.ACE.OLEDB.12.0” for linked server

SQL SERVER Puzzle – Conversion with Date Data Types

Over the years, every time I have worked with date and time data types, I have learnt something new. There are always tons of tight corners where a number of exceptions happen. Though these are known, they can take someone new by surprise, and your design decisions can have a large impact on the output you are likely to get. Getting this wrong can have a considerable business and compliance impact. Let us see a puzzle about Date Data Types.

In this puzzle, let me show you some of the default behavior that SQL Server does when working with CAST. Below is an example wherein we take a simple conversion to a DATE.

As you can see, SQL Server went about doing the rounding and it gives the date as 2017-01-13. This seems to be something basic. The puzzle was not around this output.

Task 1

What do you think is the output of the below query? Is it going to be:

SELECT CAST ('2017-1-13 23:59:59.999' AS SMALLDATETIME)

Output:

- 2017-01-13 23:59:00

- 2017-01-13 00:00:00

- 2017-01-14 00:00:00

- Error

What do you think is the answer? Why do you think this is the answer?

Task 2

If you got Task 1 correct, I think you know a lot about working with datetimes. Having said that, what would be the output for the below:

SELECT CAST('2017-1-13 23:59:59.999999999' AS SMALLDATETIME)

Output:

- 2017-01-13 23:59:00

- 2017-01-13 00:00:00

- 2017-01-14 00:00:00

- Error

Did Task 1 help you? How did you guess it? Why do you think you got this output in task 2?

Reference: Pinal Dave (http://blog.sqlauthority.com)

First appeared on SQL SERVER Puzzle – Conversion with Date Data Types